This is one of those posts that sits on the border of LEGO SERIOUS PLAY: exercises that use LEGO bricks but are not SERIOUS PLAY. Still, some of your clients may well ask you to facilitate this kind of exercise too. So here we go.

Lego Flow Game – Described by Karl Scotland

Originally based on variations of the MIT Beer Game, the approach was described by Karl Scotland on his website AvailAgility here. He describes the Flow Game as follows.

This game is a co-creation, born of the blending of ideas by myself and Dr Sallyann Freudenberg. The earliest version was the Flow Experiment which I ran at SPA in 2010. Sal was in the audience that day, and liked the concept, but found the equations a little tedious and requiring too much effort, which took away from the primary learning objectives. She wasn’t the only one! She went away and reworked it using Lego rather than maths, making it much more fun, and last year (2013) we were able to run it together. The version described below is the result of some minor tweaks since then.

Overview

The Lego Flow Game is a fun exercise to compare and contrast different approaches to processes, with respect to how work flows. The aim of the game is to build Lego Advent Calendar items, with a defined workflow: finding the next advent calendar number (analysis), finding the matching set of Lego pieces (supply), creating the Lego item (build) and checking the item has been built correctly and robustly (accept). There are specialist roles for each stage in the workflow (analysts, suppliers, builders and acceptors) as well as an overall manager and some market representatives. The game is run three times, each with a different type of process: batch and stage driven, time-boxed, and flow-based.

Materials

As a facilitator, you’ll need to download:

- Instructional Slides

- Results Spreadsheet

Each team (consisting of at least 5 people) will need to have:

- 1 Lego Advent Calendar kit, with the 24 doors cut out individually and the 24 corresponding sets of pieces separated and individually bagged.

- Blank index cards (around 50)

- 1 stapler (with staples!)

- 1 ball of BluTac

- 3 metrics sheets (1 for each round) – use the hidden slide in the deck

- 2 pens or sharpies

- Stopwatch

The Workflow

To begin, all 24 advent calendar doors should be shuffled and stacked in a single pile, and all 24 bags of Lego should be in an unsorted pile or bag. The work flows through the process, with each role performing its work as follows:

Analyst

The Analyst needs the stack of shuffled doors, the index cards, a pen and the BluTac. They find the next number in the pile of doors, starting at 1 and progressing in increasing numeric order. When they find it, they write that number at the top of an index card, stick the door to the index card below the number with the instructional picture (i.e. the side opposite the number) facing up, and then pass it to the Supplier (as defined by the policies for each round).

Supplier

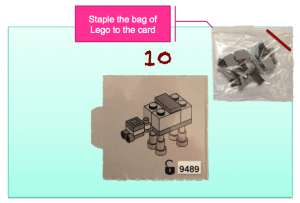

The Supplier needs access to the bags of Lego pieces and the stapler. For each index card received from the Analyst, they find the bag of Lego that matches the picture on the card, staple the bag to the index card, and pass it to the Builder (as defined by the policies for each round).

Builder

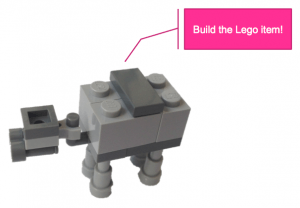

The Builder needs the index card with the instructional picture and the corresponding bag of Lego pieces attached. They build the Lego item from the pieces in the attached bag, following the instructional picture on that card, and pass it to the Acceptor (as defined by the policies for each round).

Acceptor

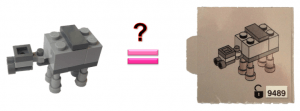

The Acceptor receives the index card with the instructional picture and the completed Lego item. They check that the Lego item exactly matches the picture on the index card. ‘Exactly’ means that all heads, bodies and legs face in the same direction and that items are placed or positioned correctly. They also check the robustness and quality of the build, such as whether all the pieces have been pushed together firmly enough. If the Lego item is correct and robust, it can be considered Done; otherwise it is rejected. Each round has different policies regarding what happens to rejected items.

Manager

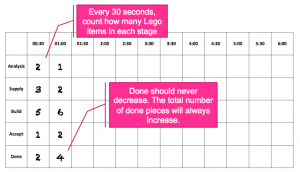

The Manager will need a stopwatch (unless the facilitator does all the timing), the metrics sheets and a pen. Their role is to keep an eye on the process and ensure that no rules or policies are broken. They may also keep time for their team, starting and stopping the rounds and letting the group know how much time they have left, although we tend to do this centrally as facilitators. Finally, the Manager captures metrics by counting how many items are in each stage of the process every 30 seconds and filling that number into the metrics sheets. The count includes all items, whether queued, being worked on, or ready to hand on to the next stage. Note that the number of items ‘Done’ is cumulative, so it will never decrease.
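The Manager’s 30-second counts are enough to draw a Cumulative Flow Diagram: each stage’s line is that stage’s count plus the counts of every stage downstream of it. The Python sketch below shows one way to do that stacking, assuming each sample is recorded as a count per stage in workflow order; the stage names and sample counts are hypothetical, not data from a real game. It also flags a sheet where Done goes down, since that count should never decrease.

```python
from typing import Dict, List

# Workflow order from the game; Done is the final, cumulative stage.
STAGES = ["Analysis", "Supply", "Build", "Accept", "Done"]


def cfd_lines(samples: List[Dict[str, int]]) -> Dict[str, List[int]]:
    """Turn per-stage counts (one dict per 30 s sample) into CFD line heights.

    The line for a stage is the number of items that have reached that stage
    or any later one, i.e. the stage's own count plus everything downstream.
    """
    lines: Dict[str, List[int]] = {stage: [] for stage in STAGES}
    previous_done = 0
    for t, sample in enumerate(samples):
        done = sample.get("Done", 0)
        # Done is cumulative, so a drop means the sheet was filled in wrongly.
        if done < previous_done:
            raise ValueError(f"Done decreased at sample {t}: {previous_done} -> {done}")
        previous_done = done
        running = 0
        # Walk from Done back to Analysis, accumulating downstream counts.
        for stage in reversed(STAGES):
            running += sample.get(stage, 0)
            lines[stage].append(running)
    return lines


if __name__ == "__main__":
    # Hypothetical counts for the first three samples of a round.
    samples = [
        {"Analysis": 1},
        {"Analysis": 1, "Supply": 1},
        {"Analysis": 1, "Supply": 1, "Build": 1},
    ]
    for stage, values in cfd_lines(samples).items():
        print(f"{stage:<9} {values}")
```

Plotting each list as a stacked area over time gives the bands of the CFD, with Done at the bottom.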

Market

This role is optional, but can be useful if there are lots of observers. We have noticed that Acceptors often allow items that we would have rejected, because they want their team to do well. Having a few people independent of the teams double-check the quality can help highlight this and generate a discussion on quality and fitness for market. Ask for some volunteers to represent the market and brief them privately (e.g. while the first round is in progress) to check the Done items and flag any that they consider unfit for the market. Any poor-quality items should be highlighted at the end of the round.

The Rounds

Before each round, explain the particular process policies that you are putting in place, as follows:

Batch and Stage Driven (Waterfall-like)

This is a single six-minute round. Teams are given a predefined target number of items (we use 5) and told that this number of items (i.e. the whole batch) must be completed in each stage before being passed in its entirety to the next stage. Thus the Analyst must find and process all 5 doors before all 5 completed index cards are passed to the Supplier, and so on. Stages are independent, with roles being specialist skills, so workers may not help each other. Any work that is sub-standard and rejected is stuck where it is: it is expected that all the right work is done to the right quality level the first time.
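To see why the later stages sit idle under these rules, here is a back-of-the-envelope sketch in Python. It assumes, hypothetically, one worker per stage, the same fixed time `t` per item in every stage, and no rejections; real rounds are messier. Under the batch rule the last stage cannot start until every earlier stage has processed the whole batch, whereas items passed on one at a time let the stages overlap like a pipeline.

```python
from typing import Tuple


def batch_times(stages: int, batch: int, t: float) -> Tuple[float, float]:
    """(first item Done, last item Done) when each stage must finish the whole batch."""
    # The final stage only starts after every earlier stage has done all `batch` items.
    last_stage_starts = (stages - 1) * batch * t
    return last_stage_starts + t, last_stage_starts + batch * t


def pipeline_times(stages: int, batch: int, t: float) -> Tuple[float, float]:
    """(first item Done, last item Done) when items are passed on one at a time."""
    # The first item needs one slot per stage; each later item finishes one slot behind.
    return stages * t, (stages + batch - 1) * t


if __name__ == "__main__":
    # Hypothetical: 4 stages (analysis, supply, build, accept), a batch of 5, t = 1 time unit.
    print("batch:   ", batch_times(4, 5, 1.0))
    print("pipeline:", pipeline_times(4, 5, 1.0))
```

With 4 stages and a batch of 5, the first item reaches Done after 16 time units under the batch rule, against 4 when items are passed on one at a time.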

Time-Boxed (Scrum-like)

This time, there are three 2-minute time-boxes. Before each time-box begins, each team should estimate/plan how many items they can complete within it, and at the end they should review/retrospect on how they did against their estimate/plan. We find 30 seconds is sufficient for this quick discussion. Once the time-box starts, each item completed in a stage may be passed immediately to the next stage; there is no need to wait for the whole batch to be completed. Primary specialisms still apply, but now teams can be more collaborative and help each other if there is nothing for them to do in their own stage. Because of this teamwork, rejected work can now be passed back and improved. At the end of a time-box, any partially completed items can be kept and worked on further once the next time-box begins. If a team completes all their estimated work, they may take in further work, but only when all the items have been accepted as Done. Encourage teams to keep to the spirit of Scrum by planning to work only on what they feel they can complete in 2 minutes, rather than starting more work than they should because they know they can continue it later.

Flow-Based (Kanban-like)

This is again a single six-minute round. There is no need to estimate how many items can be done; instead, a WIP limit of one item per stage is introduced. This limit includes both items being worked on and those completed and ready for work in the next stage. This creates a basic pull system, in which a stage may pull a ready item from the preceding stage only when it is empty. For example, when a Supplier has finished their work on an item (e.g. door 3), they cannot pull and begin work on the next item from the Analyst upstream (door 4) until the Builder downstream has pulled and begun work on their completed item (door 3). As in the time-boxed round, primary specialisms still apply, although team members may help each other if there is nothing in their stage. Similarly, rejected work can be passed back.
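The pull rule is compact enough to express in code. The following Python sketch is an illustration under assumptions of our own, not part of the game materials: the board is a hypothetical dictionary of cards per stage, a card’s `finished` flag marks work completed but not yet pulled, and the first stage (which draws from the door pile) is left out. It replays the door 3 / door 4 example above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

STAGES = ["Analysis", "Supply", "Build", "Accept"]
WIP_LIMIT = 1  # counts items in progress AND items finished but not yet pulled


@dataclass
class Card:
    door: int
    finished: bool = False  # True once this stage's work on the card is complete


def try_pull(board: Dict[str, List[Card]], stage: str) -> Optional[int]:
    """Pull one finished card from the upstream stage if the WIP limit allows.

    Returns the pulled door number, or None when the pull is not allowed.
    """
    index = STAGES.index(stage)
    if index == 0 or len(board[stage]) >= WIP_LIMIT:
        return None  # first stage draws from the door pile; a full stage must wait
    upstream = board[STAGES[index - 1]]
    for position, card in enumerate(upstream):
        if card.finished:
            upstream.pop(position)
            board[stage].append(Card(card.door))  # work restarts in the new stage
            return card.door
    return None  # nothing upstream is ready yet


if __name__ == "__main__":
    board = {
        "Analysis": [Card(4, finished=True)],
        "Supply": [Card(3, finished=True)],
        "Build": [Card(2)],
        "Accept": [],
    }
    print(try_pull(board, "Supply"))  # blocked: Supply still holds door 3
    board["Build"][0].finished = True  # the Builder completes door 2
    print(try_pull(board, "Accept"))  # Acceptor pulls door 2
    print(try_pull(board, "Build"))  # Builder pulls door 3
    print(try_pull(board, "Supply"))  # only now can Supply pull door 4
```

Because the limit counts finished-but-waiting cards, a stage frees up only when its downstream neighbour pulls, which is what turns the board into a pull system rather than a push system.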

Debriefing

After each round, gather up the metrics sheets and quickly enter the numbers in the spreadsheet. We have found it useful to have a separate file per group. While the numbers are being entered, the teams can tidy up their tables ready for the next round, including returning all the advent doors to the shuffled pile. This is so that the Analyst’s job doesn’t get easier as the number of cards decreases.

Teams can also discuss what worked and what was challenging about the policies after each round. The goal is to start thinking about the pros and cons of different approaches rather than trying to prove that one way is better than another. At the very end, show the Cumulative Flow Diagrams and use them to compare and contrast the different rounds with respect to flow.

Finally, if you have time, you can ask teams what other policy changes they would make to try and improve their processes and performance, and maybe even try them out and capture the metrics to see.

This game was further described in practice by Joe McGrath in his blog here.

The Flow Game in Practice

We run regular Delivery Methodology sessions for a mixture of Delivery Managers and other folk involved in running Delivery Teams. It’s the beginning of a Community of Practice around how we deliver.

One of the items that someone recently added to our discussion list was how we forecast effort in order to predict delivery dates. Straight away I was thinking that we shouldn’t necessarily be forecasting effort, as this doesn’t account for all the time that work spends blocked, or simply not being worked on.

We’d been through a lot of this before, but we have a bunch of new people in the teams now, and it seemed like a good idea for a refresher. My colleague Chris Cheadle had spotted the Lego Flow Game, and we were both keen to put our Lego advent calendars to good use, so we decided to run this as an introduction to the different ways in which work can be batched and managed, and the effect that might then have on how the work flows.

The Lego Flow Game was created by Karl Scotland and Sallyann Freudenberg, and you can read all of the details of how to run it on Karl’s page. It makes sense to look at how the game works before reading about how we got on.

We ran the game as described here, but Chris adapted Karl’s slides very slightly to reflect the roles and stages involved in our delivery stream, and he tweaked the analyst role slightly so they were working from a prioritised ‘programme plan’.

Round 1 – Waterfall

Maybe we’re just really bad at building Lego, but we had to extend the time slightly to deliver anything at all in this first round! Extending the deadline, to meet a fixed scope, anyone?

The reason we only got two items into test and beyond was that the wrong kits were selected during the ‘Analysis’ phase for three items. The time we spent planning and analysing these items was essentially wasted effort, as we didn’t deliver them.

The pressure of dealing with a whole batch of work at that early stage took its toll. This is probably a fairly accurate reflection of trying to do a big up-front analysis under lots of pressure, and then paying the price later for not getting everything right.

It was also noticeable that because of the nature of the ‘waterfall rules’, people working on the later stages of delivery were sat idle for the majority of the round – what a waste!

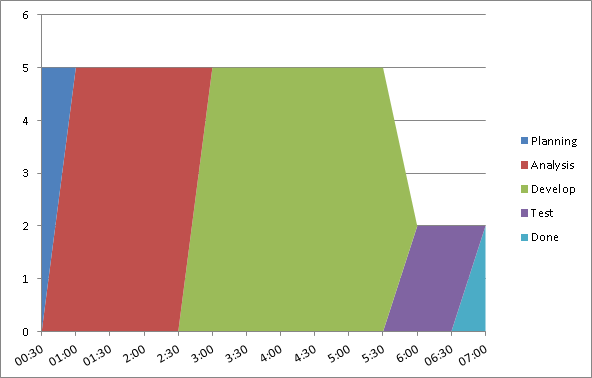

Our Cumulative Flow Diagram (CFD) for the Waterfall Round looked like this –

You can see how we only delivered two items, and these weren’t delivered until 7:00 – no early feedback from the market in this round!

CFDs are a really useful tool for monitoring workflow and showing progress. I tend to use a full CFD to examine the flow of work through a team and for spotting bottlenecks, and a trimmed down CFD without the intermediate stages (essentially a burn-up chart) for demonstrating and forecasting progress with the team and stakeholders.

You can read more about CFDs, and see loads of examples here.
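The forecasting use of a trimmed-down CFD (a burn-up chart) can be sketched as a straight-line projection of average throughput. This Python example is one simple way to do it, not necessarily the author’s; it assumes equally spaced samples of the cumulative Done count and a throughput that stays roughly constant, and the numbers in the usage example are hypothetical.

```python
import math
from typing import List, Optional


def forecast_intervals_left(done: List[int], total_scope: int) -> Optional[int]:
    """Straight-line burn-up forecast.

    `done` holds the cumulative Done count at equally spaced samples.
    Returns how many more intervals are needed at the average throughput so far,
    or None when no positive throughput can be estimated yet.
    """
    if len(done) < 2:
        return None
    throughput = (done[-1] - done[0]) / (len(done) - 1)  # items per interval
    if throughput <= 0:
        return None
    remaining = max(total_scope - done[-1], 0)
    return math.ceil(remaining / throughput)


if __name__ == "__main__":
    # Hypothetical: Done count sampled once per minute, 24 items in scope.
    print(forecast_intervals_left([0, 1, 1, 2, 3, 4, 5], total_scope=24))
```

Here five items in six minutes gives about 0.83 items per minute, so the remaining 19 items would need roughly 23 more minutes if that rate held. A straight line is the crudest possible model; it says nothing about the variability the full CFD makes visible.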

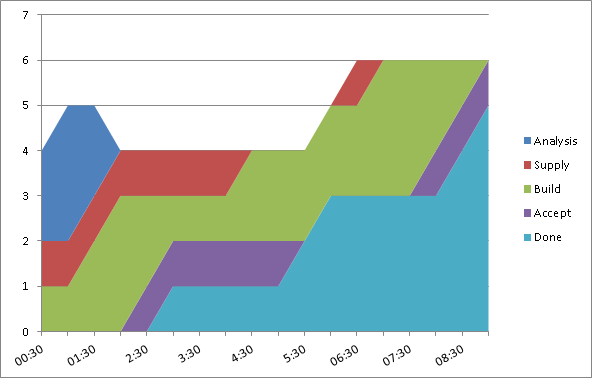

Round 2 – Time-boxed

We did three three-minute time-boxes during this round. Before we started the first time-box we estimated we’d complete three items. We only completed one – our estimation sucked!

In the second time-box we estimated we’d deliver two items and managed to deliver two, just!

Before the third time-box we discussed some improvements and estimated that we’d deliver three again. We delivered two items – almost three!

Team members were busier in this round, as items were passed through as they were ready to be worked on.

The CFD looks a bit odd, as I think we still rejected items that were incorrectly analysed (although Karl’s rules say we could pass rejected work back for improvement).

The first items were delivered after 3:00, and you can see the regular delivery intervals at 6:00 and 9:00, typical of a time-boxed approach.

Round 3 – Flow

During the flow round, people retained their specialisms, but each team member was very quick to help out at other stages, in order to keep the work flowing as quickly as possible.

Initially, it took those working in the earlier stages a little while to get used to the idea of not building up queues, but we soon got the hang of it.

The limiting of WIP to a single item in each stage forced us to swarm onto the tricky items. Everyone was busier – it ‘felt faster’.

We’ve had some success with this in our actual delivery teams – the idea of Developers helping out with testing, in order to keep queue sizes down – but I must admit it’s sometimes tricky to get an entire team into the mindset of working outside their specialisms, ‘for the good of the flow’.

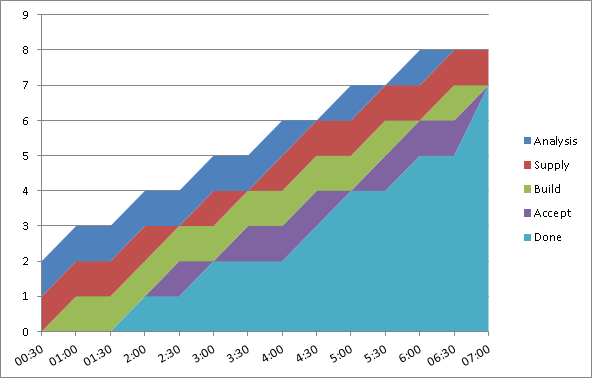

Here’s the CFD –

The total items delivered was 7, which blows away the other rounds.

You can see we were delivering items into production as early as 2:00 into the round. So not only did we deliver more in total, but we got products to market much earlier. This is so useful in real life as we can be getting early feedback, which helps us to build even better products and services.

The fastest cycle time for an individual item was 2:00.

A caveat

Delivering faster in the final round could be partly down to learning and practice – I know I was getting more familiar with building some of the Lego kits.

With this in mind, it would be interesting to run the session with a group who haven’t done it before, but doing the rounds in reverse order. Or maybe have multiple groups doing the rounds in different orders.

What else did we learn

* Limiting WIP really does work. The challenge is to take that into a real setting where specialists are delivering real products.

* I’ve used other kanban simulation tools like the coin-flip game and GetKanban. This Lego Flow Game seemed to have enough complexity to make it realistic, but kept it simple enough to be able to focus on what we’re learning from the exercise.

* Identifying Lego pieces inside plastic tubs is harder than you’d think.

Overall a neat and fun exercise, to get the whole team thinking about how work flows, and how their work fits into the bigger picture of delivering a product.

Become a LEGO Serious Play facilitator - check one of the upcoming training events!